Tuning a Machine Learning Model

Regularization

Regularization is a technique for striking a balance between overfitting and underfitting a model during training. Both overfitting and underfitting ultimately cause poor predictions on new data.

Overfitting occurs when a machine learning model learns the noise in the training data rather than the underlying patterns or trends. Models are frequently overfit when the number of training samples is small relative to the flexibility or complexity of the model. Such a model is considered to have high variance and low bias. A supervised model that is overfit will typically perform well on the data it was trained on but poorly on data it has not seen before.

Underfitting occurs when a machine learning model fails to capture variations in the data that are not caused by noise. Such a model is considered to have high bias and low variance. A supervised model that is underfit will typically perform poorly both on the data it was trained on and on data it has not seen before. Examples of overfitting, underfitting, and a well-balanced model are shown in the following figure.
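To make the two failure modes concrete, here is a minimal Python sketch using only the standard library. It uses k-nearest-neighbour regression, a technique chosen here purely for illustration rather than taken from this guide, because a single number k controls its flexibility: k = 1 memorizes every training point, while k equal to the training-set size predicts the same average everywhere. The data set, the underlying trend y = 3x, the noise level, and the helper names (`make_data`, `knn_predict`, `mse`) are all hypothetical.

```python
import random


def make_data(n, seed):
    """Hypothetical data: noisy samples of the trend y = 3x for x in [0, 10]."""
    rng = random.Random(seed)
    xs = [rng.uniform(0.0, 10.0) for _ in range(n)]
    ys = [3.0 * x + rng.gauss(0.0, 1.0) for x in xs]
    return xs, ys


def knn_predict(train_x, train_y, x, k):
    """Predict y at x as the average target of the k closest training points."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def mse(train_x, train_y, xs, ys, k):
    """Mean squared error of k-NN predictions evaluated on the points (xs, ys)."""
    errors = [(knn_predict(train_x, train_y, x, k) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)


train_x, train_y = make_data(50, seed=0)
test_x, test_y = make_data(200, seed=1)

# k = 1 reproduces each training label exactly (very flexible: overfit, high variance).
# k = 50 averages all training labels, so it predicts one constant (rigid: underfit, high bias).
results = {}
for k in (1, 5, 50):
    train_err = mse(train_x, train_y, train_x, train_y, k)
    test_err = mse(train_x, train_y, test_x, test_y, k)
    results[k] = (train_err, test_err)
    print(f"k={k:2d}  train MSE={train_err:7.3f}  test MSE={test_err:7.3f}")
```

With k = 1 the training error is exactly zero while the test error is not, the signature of overfitting; with k = 50 both errors are large, the signature of underfitting. An intermediate k such as 5 will usually generalize better than either extreme on data like this.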

Figure 14: Regularization helps balance variance and bias during model training.

Regularization is a technique for adjusting how closely a model is trained to fit historical data. One common way to apply it is to add a penalty term to the loss function, scaled by a regularization parameter, that grows as the model becomes more complex (for example, as its coefficients grow larger). The regularization parameter then controls how closely the model fits the historical data: more regularization guards against overfitting, while less regularization guards against underfitting. Tuning this parameter helps find a good tradeoff between bias and variance.
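As a sketch of how the regularization parameter trades fit against complexity, the Python below fits a hypothetical one-coefficient model ŷ = θx (no intercept) by minimizing the mean squared error plus an L2 penalty λθ². For this single-feature case, setting the derivative of the loss to zero gives the closed form θ = Sxy / (Sxx + λ), where Sxx and Sxy are the averages of x² and xy over the data. The data values and function names are illustrative assumptions, not part of the source.

```python
def fit_ridge_1d(xs, ys, lam):
    """Minimize (1/n) * sum((theta*x - y)^2) + lam * theta^2 over a single theta.

    The derivative 2*(theta*Sxx - Sxy) + 2*lam*theta is zero at
    theta = Sxy / (Sxx + lam).
    """
    n = len(xs)
    sxx = sum(x * x for x in xs) / n
    sxy = sum(x * y for x, y in zip(xs, ys)) / n
    return sxy / (sxx + lam)


def train_mse(xs, ys, theta):
    """Unpenalized mean squared error of y_hat = theta * x on the training data."""
    return sum((theta * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Small hypothetical data set, roughly y = 2x plus noise.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.3, 3.8, 6.4, 7.9, 10.2]

for lam in (0.0, 0.1, 1.0, 10.0, 100.0):
    theta = fit_ridge_1d(xs, ys, lam)
    print(f"lambda={lam:6.1f}  theta={theta:.4f}  train MSE={train_mse(xs, ys, theta):.4f}")
```

As λ grows, θ shrinks toward zero and the training error rises; λ = 0 recovers the ordinary least-squares fit. Choosing λ is therefore a choice of where to sit on the bias-variance tradeoff, and in practice it is usually made by checking error on held-out data.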

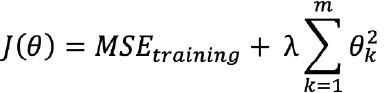

Regularization is incorporated into model training by adding a regularization term to the loss function, as shown by the loss function example that follows. This regularization term can be understood as penalizing the complexity of the model.

Recall that we defined the machine learning model that predicts outcomes Y from input features X as Y = f(X).

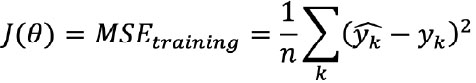

We also defined the loss function J(θ) for model training as the mean squared error (MSE):

$$J(\theta) = \frac{1}{n}\sum_{k=1}^{n}\left(\hat{y}_k - y_k\right)^2$$

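One type of regularization, L2 regularization, can be applied to such a loss function with regularization parameter λ as follows:

$$J(\theta) = \frac{1}{n}\sum_{k=1}^{n}\left(\hat{y}_k - y_k\right)^2 + \lambda\sum_{j=1}^{m}\theta_j^2$$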

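where $\hat{y}_k$ is the model prediction for data point k, $y_k$ is the corresponding actual value, $\theta_j$ is the model parameter for feature j, n is the number of data points, and m is the number of features.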
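The MSE loss and its L2-regularized form can also be evaluated directly. The sketch below assumes, for illustration only, a linear model ŷ_k = Σ_j θ_j x_kj with no intercept (the source leaves f(X) general); `predict`, `mse_loss`, and `l2_regularized_loss` are hypothetical names, and the tiny data set is made up.

```python
from typing import Sequence


def predict(theta: Sequence[float], x: Sequence[float]) -> float:
    """Hypothetical linear model: y_hat = sum_j theta_j * x_j (no intercept)."""
    return sum(t * xj for t, xj in zip(theta, x))


def mse_loss(theta: Sequence[float], X: Sequence[Sequence[float]], y: Sequence[float]) -> float:
    """J(theta) = (1/n) * sum over the n data points of (y_hat_k - y_k)^2."""
    return sum((predict(theta, xk) - yk) ** 2 for xk, yk in zip(X, y)) / len(y)


def l2_regularized_loss(theta: Sequence[float], X: Sequence[Sequence[float]],
                        y: Sequence[float], lam: float) -> float:
    """MSE plus lam times the sum of squared parameters over the m features."""
    return mse_loss(theta, X, y) + lam * sum(t * t for t in theta)


# Tiny worked example: n = 3 data points, m = 2 features.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1.0, 2.0, 2.0]
lam = 0.5

# For theta = [1.0, 1.0]: predictions are 1, 1, 2, so MSE = (0 + 1 + 0) / 3,
# and the penalty is 0.5 * (1 + 1) = 1.
for theta in ([1.0, 1.0], [1.0, 1.5]):
    print(theta, "MSE =", round(mse_loss(theta, X, y), 4),
          "regularized =", round(l2_regularized_loss(theta, X, y, lam), 4))
```

With λ = 0.5, the penalty makes θ = [1.0, 1.0] preferable to θ = [1.0, 1.5] even though the latter has the lower MSE, which is the complexity penalty at work.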