Best Practices in Prototyping

Pressure-Test Model Results by Visualizing Them

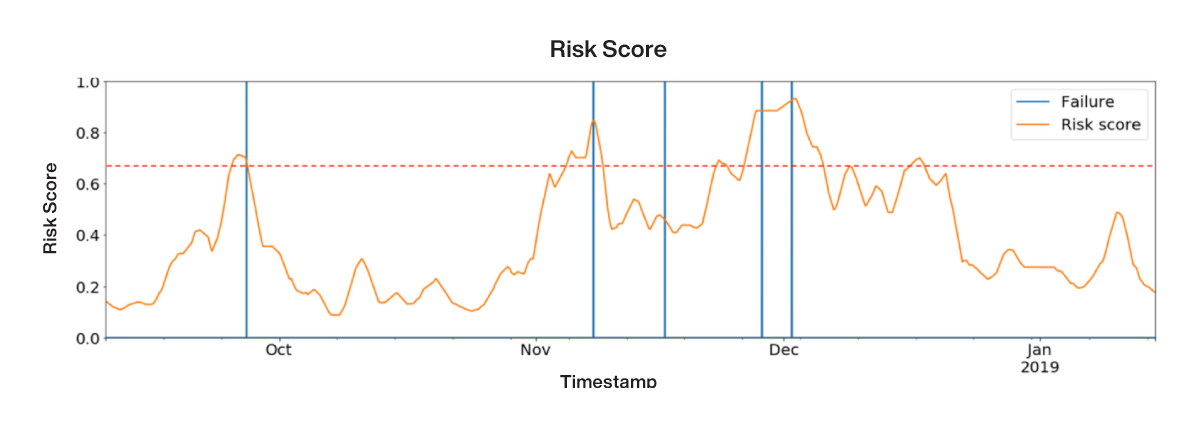

Data scientists often focus, rightly, on aggregate model performance metrics such as ROC curves and F1 scores, as discussed in the article “Evaluating Model Performance” in this guide. However, these metrics tell only part of the story; in fact, they can mask problems such as information leakage and bias, as discussed above. Managers should recognize that complex AI problems require nuanced approaches to model evaluation.

Imagine that you are a customer relationship manager and your team has given you a model that predicts customer attrition with very high precision and recall. You may be excited to use its results to call on at-risk clients. When you look at the daily model outputs, however, they barely change; meanwhile, customers are calling to tell you they are leaving. In each case, you check the AI predictions and see that the attrition risk score is extremely high on the day the customer calls you but extremely low on every preceding day. The data scientists have indeed given you a model with very high precision and recall, but it has zero actionability: to save those customers, you need sufficient advance warning. While this is an extreme example (the AI/ML problem would usually be formulated to make the prediction with sufficient advance notice), it shows why visualizing risk scores is still extremely valuable.
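To make "actionability" concrete, the minimal sketch below measures how many days of warning an alert provides. It assumes daily risk scores per customer and a known attrition day; the function name, the 0.5 alert threshold, and the two score histories are hypothetical. Both histories are flagged on the day the customer leaves, so a day-of classification metric cannot tell them apart, yet only the second gives the team time to act.

```python
from typing import List, Optional


def warning_lead_time(scores: List[float], event_day: int,
                      threshold: float = 0.5) -> Optional[int]:
    """Return how many days before `event_day` the active alert began.

    Walks backward from the attrition day while the daily score stays at or
    above `threshold` (an assumed alert cutoff). Returns None if the customer
    was not flagged on the day they left.
    """
    if scores[event_day] < threshold:
        return None  # the model missed this customer entirely
    start = event_day
    while start > 0 and scores[start - 1] >= threshold:
        start -= 1  # extend the alert back through consecutive flagged days
    return event_day - start


# Two hypothetical 30-day score histories for a customer who leaves on day 29.
late = [0.05] * 29 + [0.97]  # spikes only on the last day
early = [0.05] * 15 + [0.2, 0.4] + [0.6 + 0.03 * i for i in range(13)]  # rises from day 17

print(warning_lead_time(late, 29))   # → 0
print(warning_lead_time(early, 29))  # → 12
```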

Visually Inspect Model Results

To catch this kind of problem before deploying models into production, we recommend visually inspecting example interim model results, similar to the visual inspections performed on the data inputs discussed above.

A commonly used technique that we recommend involves producing model outputs that mimic or simulate how actions and business processes are likely to occur once the model is deployed in production.
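One way to mimic the business process is to replay the model's daily outputs the way a retention team with limited calling capacity would act on them. This is a hypothetical illustration, not a prescribed method: the names, the fixed daily call budget, the alert threshold, and the rule that a churner counts as reachable only if contacted at least `min_lead` days ahead are all assumptions.

```python
from typing import Dict, List


def replay_call_lists(scores: Dict[str, List[float]], churn_day: Dict[str, int],
                      calls_per_day: int, threshold: float,
                      min_lead: int) -> List[str]:
    """Replay daily model output as a retention team would act on it.

    Each day, the team calls up to `calls_per_day` still-active, not-yet-called
    customers whose score is at or above `threshold`, highest score first.
    Returns the churners who were called at least `min_lead` days before leaving.
    """
    n_days = len(next(iter(scores.values())))  # assumes equal-length histories
    called_on: Dict[str, int] = {}
    for day in range(n_days):
        # A customer can no longer be reached on or after their attrition day.
        candidates = [c for c in scores
                      if c not in called_on
                      and day < churn_day.get(c, n_days)
                      and scores[c][day] >= threshold]
        candidates.sort(key=lambda c: scores[c][day], reverse=True)
        for c in candidates[:calls_per_day]:
            called_on[c] = day
    return sorted(c for c, d in churn_day.items()
                  if c in called_on and d - called_on[c] >= min_lead)


# Hypothetical 10-day example: "a" drifts upward early, "b" spikes on its churn day.
scores = {
    "a": [0.1, 0.1, 0.1, 0.6, 0.7, 0.8, 0.8, 0.9, 0.9, 0.95],
    "b": [0.1] * 9 + [0.95],
    "c": [0.2] * 10,
}
churn_day = {"a": 9, "b": 9}  # "c" stays

print(replay_call_lists(scores, churn_day, calls_per_day=1,
                        threshold=0.5, min_lead=3))  # → ['a']
```

Customer "b" would look like a correct prediction on its churn day, but the replay shows the team never had a chance to call.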

For example, if a team is attempting to predict customer attrition, it is imperative to visually inspect attrition risk scores over time; if the model is indeed useful, it will show a rising risk score with sufficient advance warning for the business to act. For models like this, practitioners should also confirm that risk scores actually change over time. A customer may be low risk for a long period, and then the score rises as the customer grows increasingly dissatisfied with factors such as interactions with their financial advisor, economic returns, costs incurred, or available products and services.
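A simple automated companion to the visual check is to list customers whose scores are nearly constant over the inspection window; flat trajectories are worth a closer look, because a score that never moves cannot provide advance warning. This is a minimal sketch: the function name, the range-based test, and the 0.1 tolerance are illustrative assumptions, and flagged customers should still be inspected visually.

```python
from typing import Dict, List


def flat_trajectories(scores: Dict[str, List[float]],
                      min_range: float = 0.1) -> List[str]:
    """Return customers whose risk score barely moves across the window.

    `min_range` is an assumed tolerance: a trajectory whose max minus min
    falls below it is reported as flat.
    """
    return sorted(c for c, s in scores.items() if max(s) - min(s) < min_range)


history = {
    "a": [0.10, 0.15, 0.40, 0.70],  # rises as dissatisfaction builds
    "b": [0.52, 0.50, 0.51, 0.53],  # essentially constant
}
print(flat_trajectories(history))  # → ['b']
```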

We recommend following a similar approach to what was used during the data visualization phase of the prototype work – building out different visualizations and individual case charts, socializing these among the team and experts, printing out physical copies, and placing them prominently to encourage interaction, collaboration, and problem solving.

In the example in the following figure, the predicted risk score is shown in orange; a score of 1.0 corresponds to a 100 percent predicted likelihood of customer attrition. The blue vertical lines mark actual attrition events.

By visually inspecting plots of model predictions over time, we can see how the predictions change and evaluate how effective the model would be in real-life situations.

Figure 34: Example output of a trained machine learning model to predict customer attrition (orange) overlaid with true attrition labels (blue)
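A chart in the style of Figure 34 can be produced with matplotlib. This is a minimal sketch, assuming one customer's daily scores and a list of attrition days are already available; the function name, colors, and synthetic data are illustrative rather than taken from the figure.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen so no display is required
import matplotlib.pyplot as plt


def plot_risk_timeline(days, risk, attrition_days, title="Customer attrition risk"):
    """Plot predicted risk (orange) with actual attrition events (blue lines)."""
    fig, ax = plt.subplots(figsize=(10, 3))
    ax.plot(days, risk, color="orange", label="Predicted attrition risk")
    for i, d in enumerate(attrition_days):
        # Label only the first event line so the legend shows a single entry.
        ax.axvline(d, color="blue", linewidth=1,
                   label="Actual attrition" if i == 0 else "_nolegend_")
    ax.set_ylim(0, 1.05)  # 1.0 corresponds to 100 percent predicted likelihood
    ax.set_xlabel("Day")
    ax.set_ylabel("Predicted risk score")
    ax.set_title(title)
    ax.legend(loc="upper left")
    return fig, ax


# Hypothetical data: risk starts rising after day 30; the customer leaves on day 45.
days = list(range(60))
risk = [min(1.0, 0.05 + max(0, d - 30) * 0.06) for d in days]
fig, ax = plot_risk_timeline(days, risk, attrition_days=[45])
```

Calling `fig.savefig("attrition_risk.png", dpi=150)` writes the chart to disk, which makes it easy to print individual case charts and post them for the team, as recommended above.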